Headlines are currently dominated by record-breaking heatwaves, catastrophic flooding, massive wildfires, and other extreme weather patterns in many regions. Responding to the climate crisis requires unprecedented actions and new forms of cooperation by all parts of society. The same is true of another threat, which some scientists consider an existential risk as urgent as, if not more urgent than, climate change, nuclear war, or a global pandemic: uncontrolled advances in Artificial Intelligence (AI) technologies.

AI is commonly understood as an emerging field which combines computer science with enormous amounts of data, bringing about rapid breakthroughs in machine learning. AI isn’t new, but mass attention to its potential dangers and uses grew notably in late 2022 with the release of OpenAI’s ChatGPT tool.

But what exactly is it about AI that should worry us? How should governments, businesses and other stakeholders be working together to prevent AI harms and maximise benefits for all, and how should international human rights principles and standards be part of the governance of this emerging domain?

Confronting risks, maximising benefits

To start, we should remember that AI is already everywhere, from the underlying algorithms that make automatic translation services possible, to how digital media providers track our online preferences and provide us with content most relevant to our interests. Experts stress that current AI capabilities are still in their infancy, compared to what will be possible in the years ahead. Machines learn, quickly, and get better. The question is: better at what?

A host of AI risks must be confronted, from disinformation and threats to privacy, to the reinforcement and potential weaponisation of biases, widespread discriminatory outcomes, and job losses, among others. At the same time, AI holds enormous potential for our world if responsibly deployed.

Will governments respond?

Some governments are taking steps to catch up with technological developments. Last week, the Biden administration took its latest initiative in this area by announcing voluntary commitments by seven US-based AI companies to ensure their products are safe, secure and worthy of public trust. Commentators note that the new agreement could serve as a first step towards government regulation, or could instead be a way for companies to set their own rules for the industry.

Meanwhile, the European Union is further ahead, with the European Parliament last month adopting the Artificial Intelligence Act, aimed at making AI “safe, transparent, traceable, non-discriminatory and environmentally friendly.” The proposed rules will now be considered by the Council of the EU. The Council is also in the final stages of approving a new directive establishing rules for corporate sustainability due diligence, which will require companies to improve systems of accountability and transparency throughout their supply chains.

At the global level, the UN Security Council last week entered the AI debates for the first time, holding a discussion on how to respond to peace and security implications of these new technologies.

The UN Secretary-General warned that malicious deployment of AI systems by terrorists, or for other state or private purposes, could cause widespread physical and psychological harm to people in all countries. He urged governments to create a new UN-based body focused on preventing risks and managing future international agreements on AI monitoring and governance.

Joint action in a time of division

It is noteworthy that in the days before the Security Council session, the UN’s International Telecommunication Union (ITU) hosted an AI for Good Summit, designed to promote AI’s positive role in addressing public health challenges, gender disparities, environmental protection, and other sustainable development priorities.

All of these examples illustrate the fluid nature of the AI agenda, along with the many uncertainties and complexities inherent in any future regulations or international governance. Expanded international cooperation is clearly needed as the AI field continues to develop, but at a time when divisions between governments are widening and the multilateral system itself faces serious threats, opportunities for joint action are limited.

The Secretary-General’s stark remarks to the Security Council were in line with experts calling for an “AI pause”, under which governments would jointly agree to prohibit uncontrolled AI developments in specific areas, a step seen by many as unlikely at present. For their part, some companies are urging governments to regulate the industry, although debates on the best way forward remain contentious. Relying solely on elected officials, who barely understand the technology, to regulate AI poses its own risks.

Public-private governance structures are needed involving governments, businesses, investors, unions, experts, and civil society organisations active in the AI field, to build awareness of AI risks and opportunities, and foster innovative and inclusive forms of dialogue. And they should ensure that existing international standards aren’t neglected as they work to develop the path ahead.

Human rights should be central to AI’s future

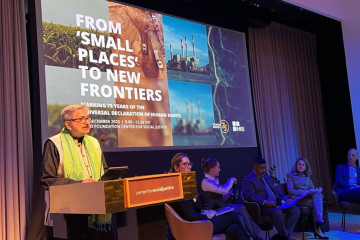

For all the talk of the importance of “ethics” and “values” in regulating AI, the international human rights framework has to date not featured prominently in state- and business-led initiatives in this area. That’s a real oversight in this 75th anniversary year of the Universal Declaration of Human Rights.

The good news is that human rights-focused standards, guidance and proposals are available as starting points for further work in this area. These include the UN Educational, Scientific and Cultural Organization’s (UNESCO) 2021 recommendations addressed to governments on promoting business responsibility throughout the AI system life cycle. A 2021 report of the UN Human Rights Council Advisory Committee offers a useful overview of impacts, opportunities and challenges of emerging digital technologies, and the rights implications of AI, and points to early examples of good practice that can be built upon.

Academic institutions and non-profit organisations focused on AI research and fairness, like the Ethics of AI Lab, the Centre for the Governance of AI, and AI for Good, among others, are also providing useful recommendations. More can be done now to combine these efforts with human rights and corporate responsibility analysis and frameworks, as is seen in the newly released NYU Stern Center for Business and Human Rights report addressing the risks of “Generative AI”. This year’s annual UN Forum on Business and Human Rights will include a session on applying the lens of the UN Guiding Principles on Business and Human Rights to this challenge.

Our own work at IHRB on AI developments is focused on building constructive dialogue with companies developing and using these tools, as well as with experts working on how to address the many risks involved. We’ll be publishing our own views on the way forward in the coming months.

AI is a frontier human rights challenge. Progress will require the creativity and commitment of many actors in society if we’re to ensure it becomes a force for good, built on responsibility and accountability, able to help solve other existential crises rather than becoming one itself.
